25. Linear State Space Models#

Contents

“We may regard the present state of the universe as the effect of its past and the cause of its future” – Marquis de Laplace

25.1. Overview#

This lecture introduces the linear state space dynamic system.

This model is a workhorse that carries a powerful theory of prediction.

Its many applications include:

representing dynamics of higher-order linear systems

predicting the position of a system

predicting a geometric sum of future values of a variable like

non financial income

dividends on a stock

the money supply

a government deficit or surplus, etc.

key ingredient of useful models

Friedman’s permanent income model of consumption smoothing

Barro’s model of smoothing total tax collections

Rational expectations version of Cagan’s model of hyperinflation

Sargent and Wallace’s “unpleasant monetarist arithmetic,” etc.

using LinearAlgebra, Statistics

25.2. The Linear State Space Model#

The objects in play are:

An

An iid sequence of

A

An

An

A

Here is the linear state-space system

25.2.1. Primitives#

The primitives of the model are

the matrices

shock distribution, which we have specialized to

the distribution of the initial condition

Given

Even without these draws, the primitives 1–3 pin down the probability distributions of

Later we’ll see how to compute these distributions and their moments.

25.2.1.1. Martingale difference shocks#

We’ve made the common assumption that the shocks are independent standardized normal vectors.

But some of what we say will be valid under the assumption that

A martingale difference sequence is a sequence that is zero mean when conditioned on past information.

In the present case, since

This is a weaker condition than that

25.2.2. Examples#

By appropriate choice of the primitives, a variety of dynamics can be represented in terms of the linear state space model.

The following examples help to highlight this point.

They also illustrate the wise dictum finding the state is an art.

25.2.2.1. Second-order difference equation#

Let

To map (25.2) into our state space system (25.1), we set

You can confirm that under these definitions, (25.1) and (25.2) agree.

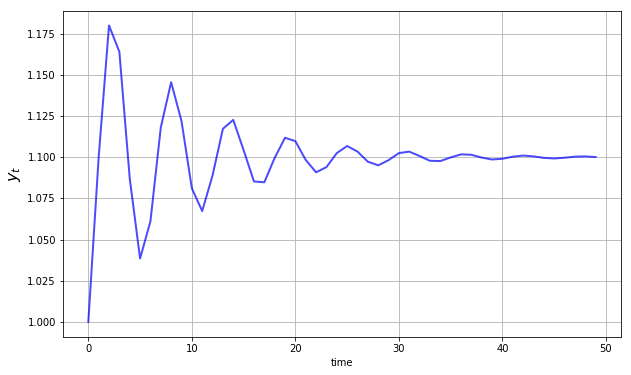

The next figure shows dynamics of this process when

Later you’ll be asked to recreate this figure.

25.2.2.2. Univariate Autoregressive Processes#

We can use (25.1) to represent the model

where

To put this in the linear state space format we take

The matrix

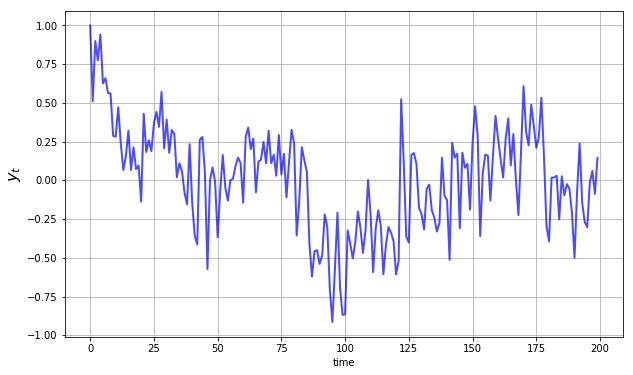

The next figure shows dynamics of this process when

25.2.2.3. Vector Autoregressions#

Now suppose that

Then (25.3) is termed a vector autoregression.

To map this into (25.1), we set

where

25.2.2.4. Seasonals#

We can use (25.1) to represent

the deterministic seasonal

the indeterministic seasonal

In fact both are special cases of (25.3).

With the deterministic seasonal, the transition matrix becomes

It is easy to check that

Such an

The indeterministic seasonal produces recurrent, but aperiodic, seasonal fluctuations.

25.2.2.5. Time Trends#

The model

We can represent this model in the linear state space form by taking

and starting at initial condition

In fact it’s possible to use the state-space system to represent polynomial trends of any order.

For instance, let

It follows that

Then

25.2.3. Moving Average Representations#

A nonrecursive expression for

Representation (25.5) is a moving average representation.

It expresses

current and past values of the process

the initial condition

As an example of a moving average representation, let the model be

You will be able to show that

Substituting into the moving average representation (25.5), we obtain

where

The first term on the right is a cumulated sum of martingale differences, and is therefore a martingale.

The second term is a translated linear function of time.

For this reason,

25.3. Distributions and Moments#

25.3.1. Unconditional Moments#

Using (25.1), it’s easy to obtain expressions for the

(unconditional) means of

We’ll explain what unconditional and conditional mean soon.

Letting

Here

The variance-covariance matrix of

Using

As with

As a matter of terminology, we will sometimes call

This is to distinguish

However, you should be aware that these “unconditional” moments do depend on

the initial distribution

25.3.1.1. Moments of the Observations#

Using linearity of expectations again we have

The variance-covariance matrix of

25.3.2. Distributions#

In general, knowing the mean and variance-covariance matrix of a random vector is not quite as good as knowing the full distribution.

However, there are some situations where these moments alone tell us all we need to know.

These are situations in which the mean vector and covariance matrix are sufficient statistics for the population distribution.

(Sufficient statistics form a list of objects that characterize a population distribution)

One such situation is when the vector in question is Gaussian (i.e., normally distributed).

This is the case here, given

our Gaussian assumptions on the primitives

the fact that normality is preserved under linear operations

In fact, it’s well-known that

In particular, given our Gaussian assumptions on the primitives and the

linearity of (25.1) we can see immediately that both

Since

But in fact we’ve already done this, in (25.6) and (25.7).

Letting

By similar reasoning combined with (25.8) and (25.9),

25.3.3. Ensemble Interpretations#

How should we interpret the distributions defined by (25.11)–(25.12)?

Intuitively, the probabilities in a distribution correspond to relative frequencies in a large population drawn from that distribution.

Let’s apply this idea to our setting, focusing on the distribution of

We can generate independent draws of

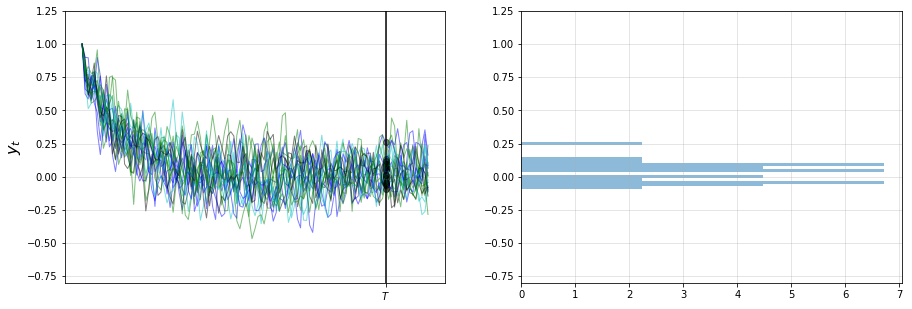

The next figure shows 20 simulations, producing 20 time series for

The system in question is the univariate autoregressive model (25.3).

The values of

In the right-hand figure, these values are converted into a rotated histogram

that shows relative frequencies from our sample of 20

(The parameters and source code for the figures can be found in file linear_models/paths_and_hist.jl)

Here is another figure, this time with 100 observations

Let’s now try with 500,000 observations, showing only the histogram (without rotation)

The black line is the population density of

The histogram and population distribution are close, as expected.

By looking at the figures and experimenting with parameters, you will gain a feel for how the population distribution depends on the model primitives listed above, as intermediated by the distribution’s sufficient statistics.

25.3.3.1. Ensemble means#

In the preceding figure we approximated the population distribution of

generating

recording each observation

histogramming this sample

Just as the histogram approximates the population distribution, the ensemble or cross-sectional average

approximates the expectation

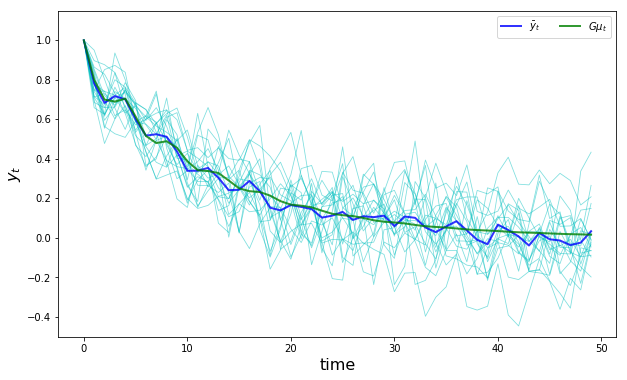

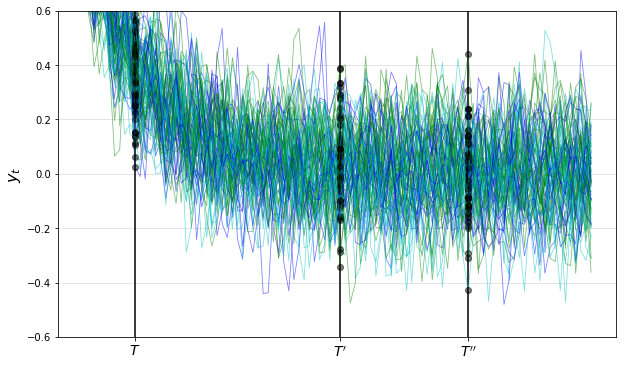

Here’s a simulation comparing the ensemble averages and population means at time points

The parameters are the same as for the preceding figures,

and the sample size is relatively small (

The ensemble mean for

The limit

(By long-run average we mean the average for an infinite (

Another application of the law of large numbers assures us that

25.3.4. Joint Distributions#

In the preceding discussion we looked at the distributions of

This gives us useful information, but doesn’t allow us to answer questions like

what’s the probability that

what’s the probability that the process

etc., etc.

Such questions concern the joint distributions of these sequences.

To compute the joint distribution of

From this rule we get

The Markov property

The marginal

In view of (25.1), the conditional densities are

25.3.4.1. Autocovariance functions#

An important object related to the joint distribution is the autocovariance function

Elementary calculations show that

Notice that

25.4. Stationarity and Ergodicity#

Stationarity and ergodicity are two properties that, when they hold, greatly aid analysis of linear state space models.

Let’s start with the intuition.

25.4.1. Visualizing Stability#

Let’s look at some more time series from the same model that we analyzed above.

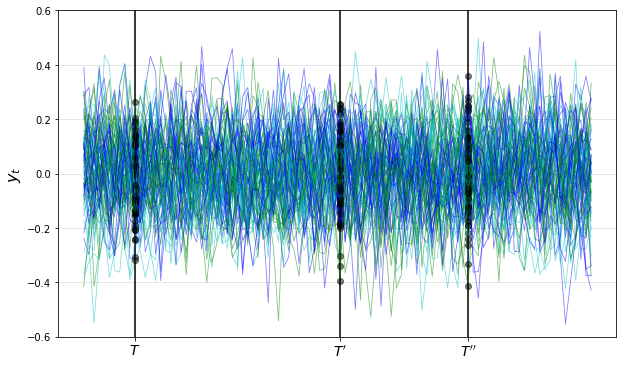

This picture shows cross-sectional distributions for

Note how the time series “settle down” in the sense that the distributions at

Apparently, the distributions of

When such a distribution exists it is called a stationary distribution.

25.4.2. Stationary Distributions#

In our setting, a distribution

Since

in the present case all distributions are Gaussian

a Gaussian distribution is pinned down by its mean and variance-covariance matrix

we can restate the definition as follows:

where

25.4.3. Covariance Stationary Processes#

Let’s see what happens to the preceding figure if we start

Now the differences in the observed distributions at

By

we’ve ensured that

Moreover, in view of (25.14), the autocovariance function takes the form

This motivates the following definition.

A process

both

In our setting,

25.4.4. Conditions for Stationarity#

25.4.4.1. The globally stable case#

The difference equation

That is, if all(abs(eigvals(A)) .< 1) == true.

The difference equation (25.7) also has a unique fixed point in this case, and, moreover

regardless of the initial conditions

This is the globally stable case — see these notes for more a theoretical treatment

However, global stability is more than we need for stationary solutions, and often more than we want.

To illustrate, consider our second order difference equation example.

Here the state is

Because of the constant first component in the state vector, we will never have

How can we find stationary solutions that respect a constant state component?

25.4.4.2. Processes with a constant state component#

To investigate such a process, suppose that

where

Let

It follows that

Let

Assume now that the moduli of the eigenvalues of

Then (25.15) has a unique stationary solution, namely,

The stationary value of

The stationary values of

Notice that here

In conclusion, if

the moduli of the eigenvalues of

then the

Note

If the eigenvalues of

25.4.5. Ergodicity#

Let’s suppose that we’re working with a covariance stationary process.

In this case we know that the ensemble mean will converge to

25.4.5.1. Averages over time#

Ensemble averages across simulations are interesting theoretically, but in real life we usually observe only a single realization

So now let’s take a single realization and form the time series averages

Do these time series averages converge to something interpretable in terms of our basic state-space representation?

The answer depends on something called ergodicity.

Ergodicity is the property that time series and ensemble averages coincide.

More formally, ergodicity implies that time series sample averages converge to their expectation under the stationary distribution.

In particular,

In our linear Gaussian setting, any covariance stationary process is also ergodic.

25.5. Noisy Observations#

In some settings the observation equation

Often this error term represents the idea that the true state can only be observed imperfectly.

To include an error term in the observation we introduce

An iid sequence of

A

and extend the linear state-space system to

The sequence

The process

The unconditional moments of

The variance-covariance matrix of

The distribution of

25.6. Prediction#

The theory of prediction for linear state space systems is elegant and simple.

25.6.1. Forecasting Formulas – Conditional Means#

The natural way to predict variables is to use conditional distributions.

For example, the optimal forecast of

The right-hand side follows from

That

The one-step-ahead forecast error is

The covariance matrix of the forecast error is

More generally, we’d like to compute the

With a bit of algebra we obtain

In view of the iid property, current and past state values provide no information about future values of the shock.

Hence

It now follows from linearity of expectations that the

The

25.6.2. Covariance of Prediction Errors#

It is useful to obtain the covariance matrix of the vector of

Evidently,

Under particular conditions,

Equation (25.23) is an example of a discrete Lyapunov equation in the covariance matrix

A sufficient condition for

Weaker sufficient conditions for convergence associate eigenvalues equaling or exceeding one in modulus with elements of

25.6.3. Forecasts of Geometric Sums#

In several contexts, we want to compute forecasts of geometric sums of future random variables governed by the linear state-space system (25.1).

We want the following objects

Forecast of a geometric sum of future

Forecast of a geometric sum of future

These objects are important components of some famous and interesting dynamic models.

For example,

if

if

25.6.3.1. Formulas#

Fortunately, it is easy to use a little matrix algebra to compute these objects.

Suppose that every eigenvalue of

It then follows that

This leads to our formulas:

Forecast of a geometric sum of future

Forecast of a geometric sum of future

25.7. Code#

Our preceding simulations and calculations are based on code in the file lss.jl from the QuantEcon.jl package.

The code implements a type which the linear state space models can act on directly through specific methods (for simulations, calculating moments, etc.).

Examples of usage are given in the solutions to the exercises.

25.8. Exercises#

25.8.1. Exercise 1#

Replicate this figure using the LSS type from lss.jl.

25.8.2. Exercise 2#

Replicate this figure modulo randomness using the same type.

25.8.3. Exercise 3#

Replicate this figure modulo randomness using the same type.

The state space model and parameters are the same as for the preceding exercise.

25.8.4. Exercise 4#

Replicate this figure modulo randomness using the same type.

The state space model and parameters are the same as for the preceding exercise, except that the initial condition is the stationary distribution.

Hint: You can use the stationary_distributions method to get the initial conditions.

The number of sample paths is 80, and the time horizon in the figure is 100.

Producing the vertical bars and dots is optional, but if you wish to try, the bars are at dates 10, 50 and 75.

25.9. Solutions#

using LaTeXStrings, QuantEcon, Plots

25.9.1. Exercise 1#

phi0, phi1, phi2 = 1.1, 0.8, -0.8

A = [1.0 0.0 0

phi0 phi1 phi2

0.0 1.0 0.0]

C = zeros(3, 1)

G = [0.0 1.0 0.0]

mu_0 = ones(3)

lss = LSS(A, C, G; mu_0)

x, y = simulate(lss, 50)

plot(dropdims(y, dims = 1), color = :blue, linewidth = 2, alpha = 0.7)

plot!(xlabel = "time", ylabel = L"y_t", legend = :none)

25.9.2. Exercise 2#

using Random

Random.seed!(42) # For deterministic results.

phi1, phi2, phi3, phi4 = 0.5, -0.2, 0, 0.5

sigma = 0.2

A = [phi1 phi2 phi3 phi4

1.0 0.0 0.0 0.0

0.0 1.0 0.0 0.0

0.0 0.0 1.0 0.0]

C = [sigma

0.0

0.0

0.0]

G = [1.0 0.0 0.0 0.0]

ar = LSS(A, C, G; mu_0 = ones(4))

x, y = simulate(ar, 200)

plot(dropdims(y, dims = 1), color = :blue, linewidth = 2, alpha = 0.7)

plot!(xlabel = "time", ylabel = L"y_t", legend = :none)

25.9.3. Exercise 3#

phi1, phi2, phi3, phi4 = 0.5, -0.2, 0, 0.5

sigma = 0.1

A = [phi1 phi2 phi3 phi4

1.0 0.0 0.0 0.0

0.0 1.0 0.0 0.0

0.0 0.0 1.0 0.0]

C = [sigma

0.0

0.0

0.0]

G = [1.0 0.0 0.0 0.0]

I = 20

T = 50

ar = LSS(A, C, G; mu_0 = ones(4))

ymin, ymax = -0.5, 1.15

ensemble_mean = zeros(T)

ys = []

for i in 1:I

x, y = simulate(ar, T)

y = dropdims(y, dims = 1)

push!(ys, y)

ensemble_mean .+= y

end

ensemble_mean = ensemble_mean ./ I

plot(ys, color = :blue, alpha = 0.2, linewidth = 0.8, label = "")

plot!(ensemble_mean, color = :blue, linewidth = 2, label = L"\overline{y_t}")

m = moment_sequence(ar)

pop_means = zeros(0)

for (i, t) in enumerate(m)

(mu_x, mu_y, Sigma_x, Sigma_y) = t

push!(pop_means, mu_y[1])

i == 50 && break

end

plot!(pop_means, color = :green, linewidth = 2, label = L"G \mu_t")

plot!(ylims = (ymin, ymax), xlabel = "time", ylabel = L"y_t", legendfont = font(12))

25.9.4. Exercise 4#

phi1, phi2, phi3, phi4 = 0.5, -0.2, 0, 0.5

sigma = 0.1

A = [phi1 phi2 phi3 phi4

1.0 0.0 0.0 0.0

0.0 1.0 0.0 0.0

0.0 0.0 1.0 0.0]

C = [sigma

0.0

0.0

0.0]

G = [1.0 0.0 0.0 0.0]

T0 = 10

T1 = 50

T2 = 75

T4 = 100

ar = LSS(A, C, G; mu_0 = ones(4))

ymin, ymax = -0.6, 0.6

mu_x, mu_y, Sigma_x, Sigma_y = stationary_distributions(ar)

ar = LSS(A, C, G; mu_0 = mu_x, Sigma_0 = Sigma_x)

colors = ["c", "g", "b"]

ys = []

x_scatter = []

y_scatter = []

for i in 1:80

rcolor = colors[rand(1:3)]

x, y = simulate(ar, T4)

y = dropdims(y, dims = 1)

push!(ys, y)

x_scatter = [x_scatter; T0; T1; T2]

y_scatter = [y_scatter; y[T0]; y[T1]; y[T2]]

end

plot(ys, linewidth = 0.8, alpha = 0.5)

plot!([T0 T1 T2; T0 T1 T2], [-1 -1 -1; 1 1 1], color = :black, legend = :none)

scatter!(x_scatter, y_scatter, color = :black, alpha = 0.5)

plot!(ylims = (ymin, ymax), ylabel = L"y_t", xticks = [], yticks = ymin:0.2:ymax)

plot!(annotations = [(T0 + 1, -0.55, L"T"); (T1 + 1, -0.55, L"T^\prime");

(T2 + 1, -0.55, L"T^{\prime\prime}")])